This page was built using the Academic Project Page Template.

This website is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Marianna Nezhurina, Tomer Porian, Giovanni Puccetti, Tommie Kerssies, Romain Beaumont, Mehdi Cherti, Jenia Jitsev

NeurIPS 2025

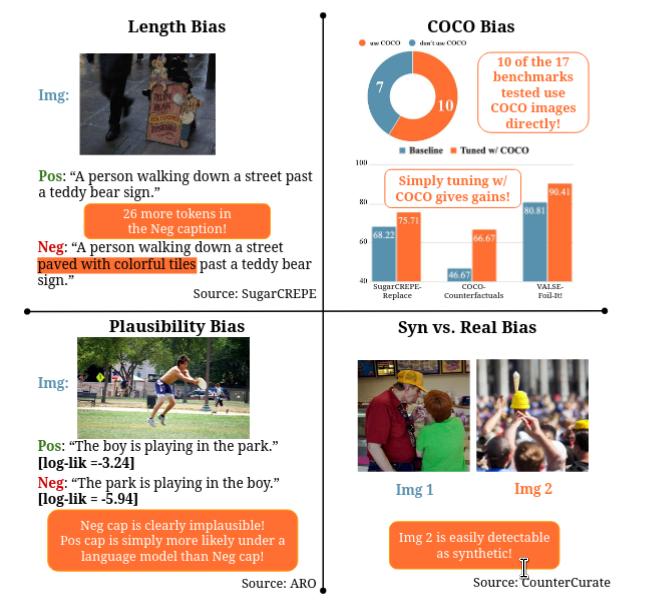

Vishaal Udandarao, Mehdi Cherti, Shyamgopal Karthik, Jenia Jitsev, Samuel Albanie, Matthias Bethge

Short version: Eval-FoMo 2 CVPR 2025 Workshop

Marianna Nezhurina, Lucia Cipolina-Kun, Mehdi Cherti, Jenia Jitsev

Preprint (2024)

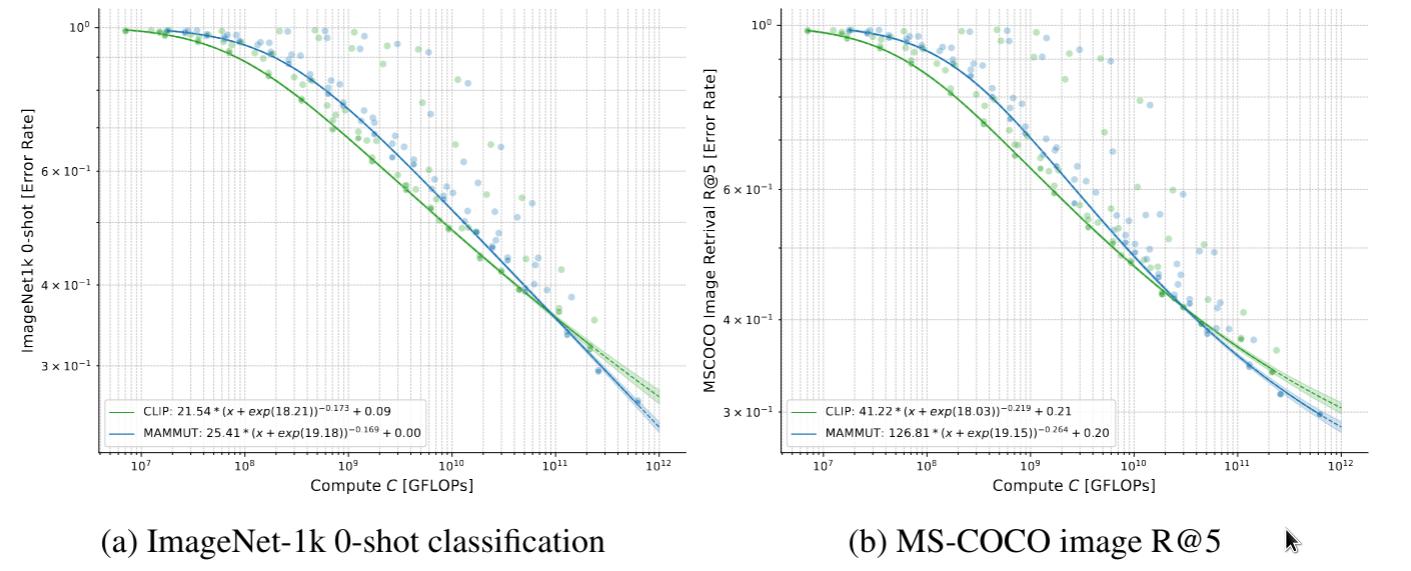

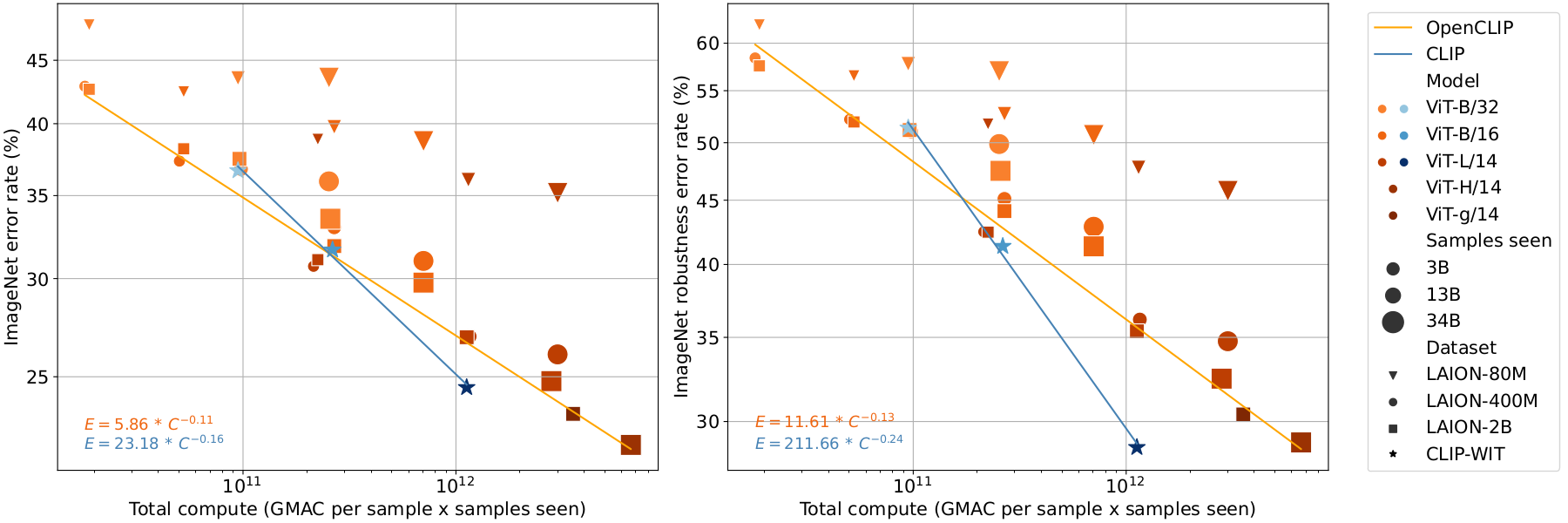

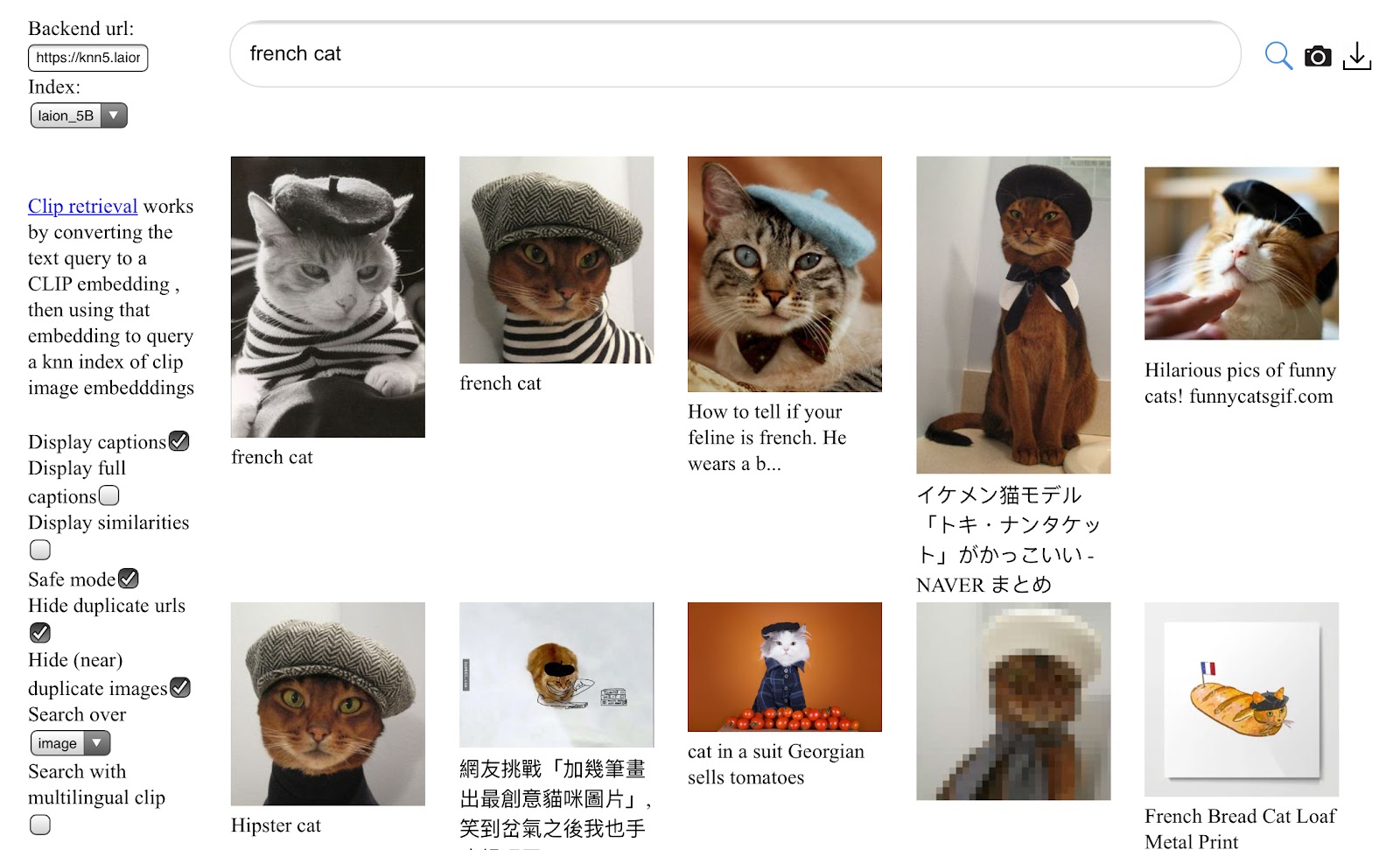

Mehdi Cherti, Romain Beaumont, Ross Wightman, Mitchell Wortsman, Gabriel Ilharco, Cade Gordon, Christoph Schuhmann, Ludwig Schmidt, Jenia Jitsev

CVPR 2023

Christoph Schuhmann, Romain Beaumont, Richard Vencu, Cade Gordon, Ross Wightman, Mehdi Cherti, Theo Coombes, Aarush Katta, Clayton Mullis, Mitchell Wortsman, Patrick Schramowski, Srivatsa Kundurthy, Katherine Crowson, Ludwig Schmidt, Robert Kaczmarczyk, Jenia Jitsev

NeurIPS 2022 Datasets and Benchmarks track (Outstanding paper award)

OpenReview / Poster / Video

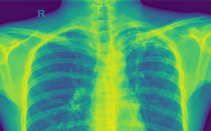

Mehdi Cherti, Jenia Jitsev

Short version: Medical Imaging Meets NeurIPS 2021 Workshop

Long version: IJCNN 2021

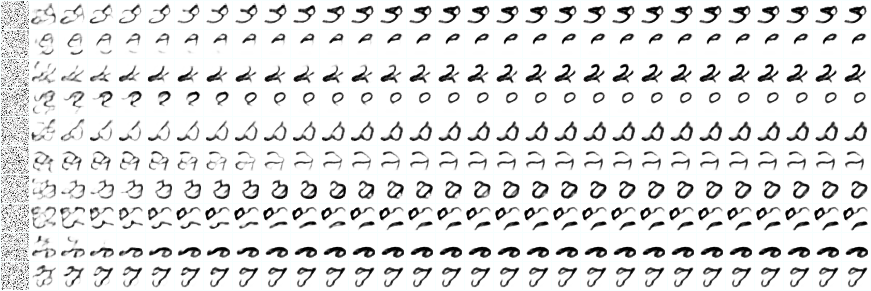

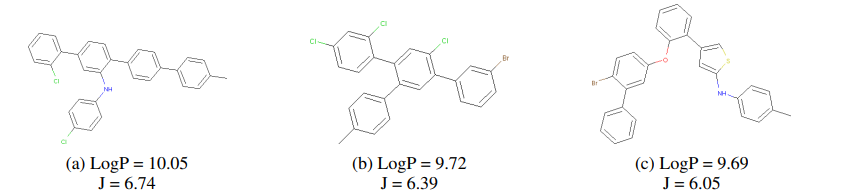

Mehdi Cherti, Balázs Kégl, Akın Kazakçı

ICLR 2017 workshop
